Imagine opening your phone to see a video of yourself saying and doing things you never did. Your face, your voice—but the actions are a complete fiction. This unsettling scenario is no longer science fiction; it’s a digital reality facing public figures today.

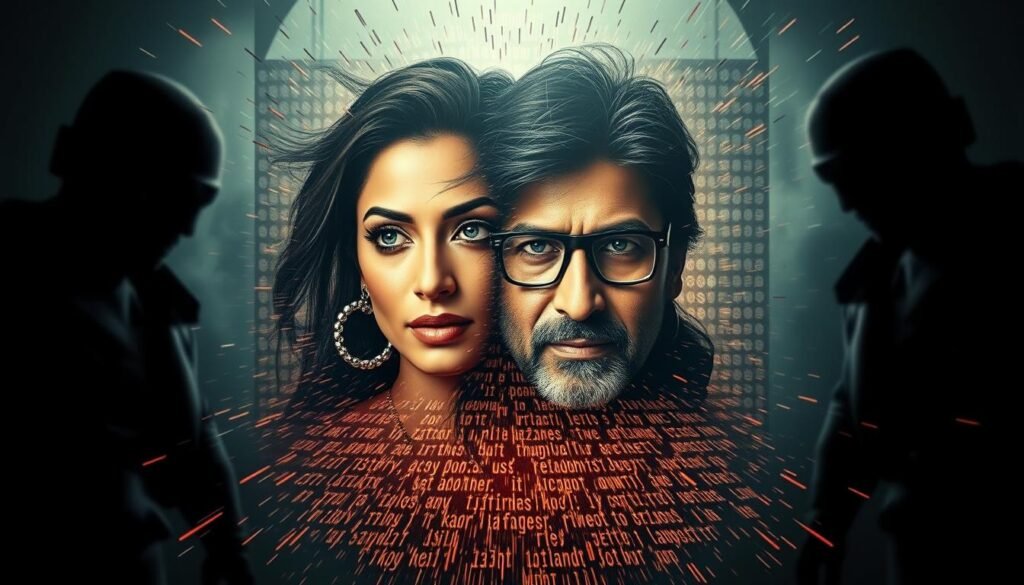

We are witnessing a pivotal moment: two of India’s most beloved actors, Aishwarya Rai and Abhishek Bachchan, have taken a historic stand. The couple is confronting a new frontier of digital infringement, moving beyond traditional privacy concerns to challenge the unauthorized use of their identities.

Their legal action, filed in early September, seeks significant damages and aims to establish crucial protections. This YouTube lawsuit represents more than a personal grievance. It is a battle for control in the age of artificial intelligence.

The case highlights a critical gap in Indian law regarding “personality rights.” As deepfake videos become easier to create, the potential for harm grows rapidly. This legal challenge also questions whether current platform policies are adequate.

We see this as a landmark case for the entire Bollywood industry and beyond. It forces us to consider how we protect individual dignity in our rapidly evolving digital world.

Understanding the Deepfake Scandal and Its Origins

The democratization of technology has brought unforeseen consequences, as accessible AI tools now enable identity manipulation at scale. We have seen sophisticated platforms turn simple text prompts into convincing visual content that blurs the line between reality and fabrication.

Incident Overview and Timeline

The scandal centered on a specific channel that systematically produced hundreds of manipulated videos. The channel uploaded content depicting fabricated scenarios that accumulated millions of views.

Despite court intervention in early September, hundreds of similar videos remained accessible. The persistence of this content exposed systemic enforcement challenges across digital platforms.

How AI-Generated Content Sparked the Lawsuit

The technical process was disturbingly simple. Creators fed text prompts into AI platforms to generate initial images, then turned those images into convincing videos within minutes.

This workflow showed how accessible deepfake technology had become. Content that could be created and distributed this quickly posed immediate reputational risks as well as long-term archival ones.

Aishwarya Rai and Abhishek Bachchan vs. YouTube: A Legal Battle Unfolds

The couple’s comprehensive court filings show that protecting personality rights now requires multi-layered legal strategies. We see this case setting new standards for digital rights enforcement.

Court Filings and Delhi High Court Involvement

The legal team submitted an extensive 1,500-page petition to the Delhi High Court on September 6. The petition targeted both digital infringements and unauthorized physical merchandise.

The court responded swiftly, ordering the removal of 518 specific links in early September. This immediate action acknowledged the financial and reputational harm caused by the violations.

Google, YouTube’s parent company, must have its legal representatives submit written responses before the January 15 hearing. The Delhi High Court continues to oversee this complex digital rights case.

Intellectual and Personality Rights Challenges

Unlike many U.S. states, India has no explicit statutory protection for personality rights. Public figures seeking to protect their identities must therefore rely largely on court precedent.

The case builds upon the 2023 precedent involving actor Anil Kapoor. That decision recognized protection for image, voice, and signature phrases.

This legal action seeks to establish a stronger intellectual property rights framework for the digital age. The outcome could transform how platforms handle AI-generated content featuring real individuals.

Implications for Bollywood and Social Media Regulation

At the heart of this legal confrontation lies a concern about AI’s cascading effects on the misuse of digital identity. Once infringing content enters AI training cycles, it can be reproduced and multiplied in everything those models later generate.

Impact on Bollywood’s Image and Celebrity Rights

The entertainment industry faces unprecedented challenges. When misleading content is used to train AI models, it creates a dangerous feedback loop in which those systems learn from distorted and biased material.

This creates a potential multiplier effect, in which a single violation could generate thousands of derivative ones. The industry needs robust frameworks to prevent this cascade.

Policy Changes and Platform Accountability

Current platform policies show significant gaps. YouTube’s option allowing creators to share their content for training third-party AI systems lacks proper safeguards against infringing uploads, which creates accountability challenges.

We see the need for upstream prevention mechanisms. Platforms must develop better systems to stop infringing material from being used to train future AI models, which requires new governance approaches.

The case highlights how the quality of training data directly shapes what AI systems produce. Proper controls can prevent the multiplication of digital rights violations across the ecosystem.

Looking Ahead: Safeguarding Celebrity Rights in the Age of AI

As we stand at this technological crossroads, the protection of individual identity becomes paramount in our digital ecosystem. The January 15 hearing represents a critical milestone that will likely shape legal precedents for years to come.

Despite court-ordered takedowns of specific links, enforcement challenges persist. Hundreds of similar manipulated videos remain accessible, demonstrating how complex digital rights protection has become.

Legal experts suggest judicial outcomes may focus on policy improvements rather than direct platform liability. Potential solutions include expedited response systems for celebrity claimants.

We envision comprehensive solutions that require collaboration among multiple stakeholders. These include legislative action, platform policy innovations, and technological safeguards.

The outcome will reverberate through India’s entertainment industry for years. It represents a significant step toward balancing creative expression with robust personality rights protection.